I’ve got a new project which I ought to blog about somewhere, and it’s related to file formats, so it’s going here.

There have been projects to archive information about filk songs. They’ve tended toward wikis such as the Filk Discography Wiki, which contains information about filk recordings. Many filk albums have gone out of publication and might otherwise be forgotten, and the wiki keeps them in the cultural memory. Wikis are fine, and they’re easy to participate in with little technical knowledge. They’re also fragile; if the hosting for a wiki goes away, it might find a new home, but it might disappear if no one takes prompt action.

Structured information has advantages. It’s easy for anyone with a little file storage to keep a copy and give it to others. People can create their own repositories, perhaps of songs which they have published. It’s easy to search them and extract information, e.g., all the songs by an author. This isn’t to say that we should abandon wikis, but having structured information as well strengthens the effort. With a little work, it can be fed to wikis.
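As a rough illustration of that kind of query, here’s a minimal Python sketch. It assumes the song records are held as a list of dictionaries with `title` and `authors` fields. Those field names and the sample entries are placeholders I made up for the example, not an agreed format.

```python
def songs_by_author(songs, author):
    """Return the titles of all songs crediting `author` (case-insensitive)."""
    wanted = author.casefold()
    return [
        song["title"]
        for song in songs
        # "authors" is a hypothetical field holding a list of names
        if wanted in (name.casefold() for name in song.get("authors", []))
    ]


# Placeholder records, invented purely for illustration
SAMPLE_SONGS = [
    {"title": "Example Song One", "authors": ["Author A"]},
    {"title": "Example Song Two", "authors": ["Author B", "Author A"]},
    {"title": "Example Song Three", "authors": ["Author C"]},
]

if __name__ == "__main__":
    for title in songs_by_author(SAMPLE_SONGS, "author a"):
        print(title)
```

Anyone with a copy of the data could run a query like this locally, without depending on a hosted service.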

This is why I’ve created the Argoknot project. It’s a Python-based project to process song data in JSON format. As of this post, it can do one thing: convert CSV files to JSON. I’m planning to add the ability to convert XML files that use the MODS schema. There is a pile of such files in the MASSFILC Filk Book Index.
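To give a feel for what a CSV-to-JSON conversion involves, here’s a minimal sketch using only Python’s standard library. It is not Argoknot’s actual code, and the column names (`title`, `author`, `album`) are hypothetical; it just turns each CSV row into a JSON object keyed by the header row.

```python
import csv
import io
import json


def csv_to_json(csv_text):
    """Convert CSV text with a header row into a JSON array of objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    records = []
    for row in reader:
        record = {
            key: value.strip()
            for key, value in row.items()
            # Skip surplus cells (key is None) and empty or missing values
            if key is not None and value and value.strip()
        }
        records.append(record)
    # ensure_ascii=False keeps accented characters readable in the output
    return json.dumps(records, indent=2, ensure_ascii=False)


# Placeholder data with hypothetical column names
SAMPLE_CSV = """title,author,album
Example Song One,Author A,Example Album
Example Song Two,Author B,
"""

if __name__ == "__main__":
    print(csv_to_json(SAMPLE_CSV))
```

Leaving out empty cells rather than storing empty strings is a design choice made for this sketch; a shared format would need to settle questions like that.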

One of the project’s aims is to create a JSON nomenclature for the filk community. That will let other projects work with the same JSON files to create websites, import into wikis, or do lots of other things.

What I’m doing here is just a start, and it won’t get far without the participation of others. I encourage others in the filk community to join the effort, whether working directly on Argoknot, offering suggestions on how to organize the data, or creating other coding projects.

UPDATE: I’ve enabled discussions on the project and posted an initial message inviting comments and suggestions. So please comment there rather than here if it’s OK with you.

Email migration

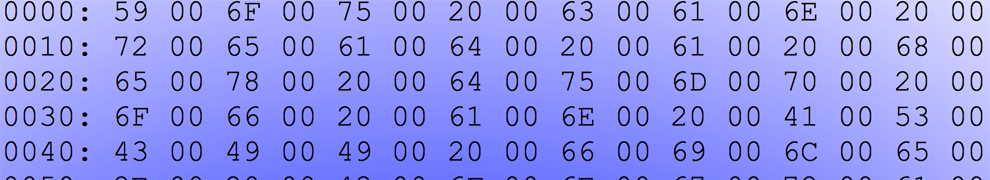

Recently I migrated my email to a new host and discovered a hazard I hadn’t known about before. I didn’t lose anything, but I came closer to it than I would have liked. Since it’s a personal digital preservation issue, it merits a rare post on this blog.

There are two widely used open protocols for email clients: POP3 and IMAP. The latter is far more widely used today, because it lets you synchronize your mailboxes across multiple devices. The downside is that your mail lives on the server and may or may not be saved on your client. If you point your mailbox at a new server, the client will sync with the new, empty account, and all your existing mail could disappear from it. As long as you haven’t terminated the old service, you should be able to get it back, but it could be a pain.
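One way to guard against this is to pull a local copy of each folder from the old server before switching. Here’s a minimal sketch using Python’s standard `imaplib` and `mailbox` modules. The host, credentials, and file names are placeholders, and the function names `connect` and `backup_folder` are my own. It’s an illustration of the idea, not a full backup tool, and it doesn’t handle things like folder names that need quoting.

```python
import imaplib
import mailbox


def connect(host, user, password):
    """Open an SSL IMAP connection and log in."""
    conn = imaplib.IMAP4_SSL(host)
    conn.login(user, password)
    return conn


def backup_folder(conn, folder, mbox_path):
    """Copy every message in one IMAP folder into a local mbox file.

    `conn` must already be logged in. Returns the number of messages saved.
    """
    # readonly=True so fetching does not change message flags on the server
    status, _ = conn.select(folder, readonly=True)
    if status != "OK":
        raise RuntimeError(f"cannot open folder {folder!r}")

    status, data = conn.search(None, "ALL")
    if status != "OK":
        raise RuntimeError("search failed")

    box = mailbox.mbox(mbox_path)  # created if it does not exist
    saved = 0
    try:
        for num in data[0].split():
            status, parts = conn.fetch(num, "(RFC822)")
            # A successful fetch returns a (header, raw message) tuple first
            if status != "OK" or not parts or not isinstance(parts[0], tuple):
                continue
            box.add(parts[0][1])
            saved += 1
    finally:
        box.close()  # flushes pending writes to disk
    return saved


# Example use (placeholder host and credentials):
# conn = connect("imap.example.com", "user@example.com", "password")
# print(backup_folder(conn, "INBOX", "inbox-backup.mbox"))
# conn.logout()
```

The mbox format is a plain-text file that many mail clients can import, so the copy remains usable even if neither server is available later.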


Posted in commentary

Tagged email, preservation